Patching Oracle Exalogic - Updating the ZFS 7320 Storage Appliance II

Author: Jos Nijhoff | Category: Oracle | Part 3b

In my previous post we checked the current software versions on the storage, started the rolling upgrade process for the ZFS 7320 storage appliance and upgraded the ILOM of storage head 2. Now we will finish what we started by performing step 3: upgrading the storage software to version 2011.1.1.0, which is needed for Exalogic versions 1.0.0.0.5 and 2.0.0.0.0.

1.6 Upgrading storage head 2 continued

Let’s see where we were in the upgrade guide, section 2.4.3:

- Step 3: Upgrading ZFS Storage 7320 Software to version 2011.1.1.0.

This section describes how to upgrade the ZFS Storage 7320 software to version 2011.1.1.0 (2011.04.24.1.0,1-1.8). Ensure that the storage head is running version 2010.Q3.2.1 (2010.08.17.2.1,1-1.21) or higher before proceeding with the upgrade to 2011.1.1.0. Also, ensure that the ILOM is upgraded, and that ILOM/BIOS is running version 3.0.16.10, build r65138, before applying this software update.
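Both prerequisites can be checked mechanically once you have read the current values off each head. Below is a minimal Python sketch, assuming you supply the internal build string (the form in parentheses, such as 2010.08.17.2.1,1-1.21) and the ILOM version yourself. The function names are hypothetical, and the assumption that build strings order field by field as integers is not something the guide states.

```python
# Sketch: check the two prerequisites from section 2.4.3 before upgrading.
# Assumption: internal build strings order field by field as integers.
from typing import Tuple

MIN_STORAGE_BUILD = "2010.08.17.2.1,1-1.21"  # 2010.Q3.2.1
REQUIRED_ILOM = "3.0.16.10"                  # build r65138


def parse_build(build: str) -> Tuple[int, ...]:
    """Split '2010.08.17.2.1,1-1.21' into (2010, 8, 17, 2, 1, 1, 1, 21)."""
    release, _, branch = build.strip().partition(",")
    fields = release.split(".") + branch.replace("-", ".").split(".")
    return tuple(int(f) for f in fields if f)


def storage_ok(current_build: str) -> bool:
    """True if the head runs 2010.Q3.2.1 or higher."""
    return parse_build(current_build) >= parse_build(MIN_STORAGE_BUILD)


def ilom_ok(current_ilom: str) -> bool:
    """The guide names one exact ILOM/BIOS version, so compare for equality."""
    return current_ilom.strip() == REQUIRED_ILOM
```

The ILOM check is deliberately an equality test, because the guide asks for a specific version rather than a minimum.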

To upgrade the ZFS storage 7320 software to version 2011.1.1.0, complete the following steps:

1. Log in to the ILOM of the storage head where you updated the ILOM, as root:

2. Check if this storage head has network connectivity.

3. Run the following command:

Ensure that you have created the patches share on the Sun ZFS Storage 7320 appliance, and enabled the FTP service on the share with the permission for root access. Configure the appliance to download the new software from <ftp URL to ak-nas-2011-04-24-1-0-1-1-8-nd.pkg.gz> by using the set url, set user, and set password commands as follows:
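Before committing to that download on the appliance, it can save a failed attempt to confirm that the patches share answers on FTP and that the package is really there. A minimal Python sketch using the standard ftplib and socket modules; the host, directory and credentials are hypothetical placeholders for your own patches share, and build_url only composes the kind of value you would pass to set url.

```python
# Sketch: pre-flight check of the patches share from an admin workstation.
# Host, directory and credentials are hypothetical placeholders.
import ftplib
import socket
from typing import Optional

PACKAGE = "ak-nas-2011-04-24-1-0-1-1-8-nd.pkg.gz"


def build_url(host: str, directory: str, package: str = PACKAGE) -> str:
    """Compose an ftp:// URL of the kind you would pass to 'set url'."""
    directory = directory.strip("/")
    path = f"{directory}/{package}" if directory else package
    return f"ftp://{host}/{path}"


def ftp_reachable(host: str, port: int = 21, timeout: float = 5.0) -> bool:
    """Can a TCP connection be opened to the FTP service at all?"""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def package_size(host: str, user: str, password: str, directory: str,
                 package: str = PACKAGE) -> Optional[int]:
    """Log in over FTP and return the package size in bytes, or None if missing."""
    with ftplib.FTP(host, timeout=30) as ftp:
        ftp.login(user, password)
        if directory.strip("/"):
            ftp.cwd(directory)
        ftp.voidcmd("TYPE I")  # many servers only answer SIZE in binary mode
        try:
            return ftp.size(package)
        except ftplib.error_perm:  # e.g. 550: no such file
            return None
```

Note that this tests reachability from the workstation, not from the storage head itself, so it only rules out problems on the share side.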

Then select the newly downloaded version and start the upgrade:

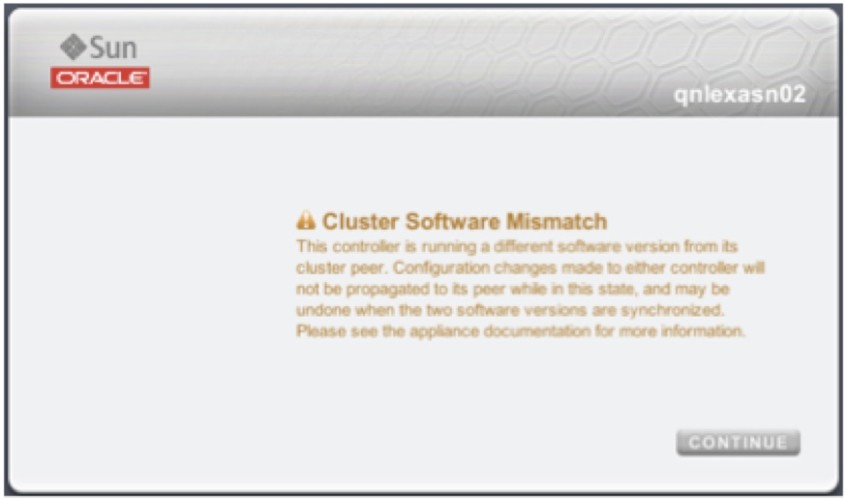
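After the download, the package is listed under its build string rather than its file name. The sketch below works out which entry to select. It rests on two assumptions: that the file name encodes the build with dashes, inferred from the single example in the guide, and that list entries look like ak-nas@<build>, which you should verify against what your appliance actually shows. The helper names are hypothetical.

```python
# Sketch: map the package file name to the update entry to select.
# Assumption (from one example): ak-nas-2011-04-24-1-0-1-1-8-nd.pkg.gz
# corresponds to build 2011.04.24.1.0,1-1.8.
import re
from typing import Iterable, Optional

_PKG = re.compile(r"ak-nas-(\d+)-(\d+)-(\d+)-(\d+)-(\d+)-(\d+)-(\d+)-(\d+)")


def build_from_package(filename: str) -> str:
    """Rebuild the internal build string from the package file name."""
    m = _PKG.search(filename)
    if m is None:
        raise ValueError(f"unrecognised package name: {filename}")
    f = m.groups()
    return f"{'.'.join(f[:5])},{f[5]}-{f[6]}.{f[7]}"


def entry_to_select(entries: Iterable[str], filename: str) -> Optional[str]:
    """Return the listed entry whose build (the part after '@') matches exactly."""
    build = build_from_package(filename)
    for entry in entries:
        if entry.split("@", 1)[-1] == build:
            return entry
    return None
```

Matching the whole build string after the `@`, rather than a suffix, avoids accidentally picking a neighbouring build.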

OK, we have now upgraded the storage software on storage head 2. Now log back in to its CLI console. We see a warning that the appliance is now running two different software versions (as we have not upgraded the active head 1 yet).

This message is also shown when logging in to the storage web interface (figure 3), as a reminder that we should not leave the appliance in this state.

Check the current version:

OK, storage head 2 is ready and we should now do a switchover to free head 1 from active duty and upgrade it next.
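The warning boils down to the two heads reporting different builds. As a small illustration, assuming you collect each head's build string into a dictionary yourself (the head names and any sample builds are hypothetical), the heads still to be upgraded fall out directly:

```python
# Sketch: detect the mixed-version state and list heads still to upgrade.
# Input is a hypothetical {head name: build string} mapping you collect yourself.
from typing import Dict, List

TARGET_BUILD = "2011.04.24.1.0,1-1.8"  # 2011.1.1.0


def is_mixed(versions: Dict[str, str]) -> bool:
    """True while the cluster heads report different software builds."""
    return len(set(versions.values())) > 1


def heads_pending(versions: Dict[str, str], target: str = TARGET_BUILD) -> List[str]:
    """Names of heads that still run something other than the target build."""
    return sorted(head for head, build in versions.items() if build != target)
```

At this point in the procedure you would expect is_mixed to be true and heads_pending to name only head 1.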

1.7 Doing the switchover between heads 1 and 2

The document says only this:

Now you can perform a takeover operation, as required, depending on your choice of storage head to serve as the active storage head. Ensure that one of the storage heads is in the Active (takeover completed) state, and the other is in the Ready (waiting for failback) state. This process completes software upgrade on the ZFS storage appliance.
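The end condition in the guide can be stated as a simple check on the two heads' cluster states. A sketch only: the state strings are copied from the guide's wording, and whether the CLI prints them exactly like this is an assumption.

```python
# Sketch: the upgrade is complete when one head is active and its peer is ready.
# State strings are taken from the guide's text; CLI output may differ.
ACTIVE = "Active (takeover completed)"
READY = "Ready (waiting for failback)"


def upgrade_finished(state_a: str, state_b: str) -> bool:
    """True when one head is active and the other ready, in either order."""
    return {state_a.strip(), state_b.strip()} == {ACTIVE, READY}
```

Using a set makes the check independent of which head you query first.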

OK, but how do we perform the takeover from head 1 to head 2? The takeover can be done via the web GUI, which I will demonstrate in a future post. Here's how to do it from the CLI:

Storage head 1 will now restart and rejoin the cluster, this time as the standby head. After waiting a little (30 seconds or so), check the status again:
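Rather than guessing how long head 1 needs to restart, you can poll its state until it reports the expected value. A minimal Python sketch, assuming a get_state callable of your own (hypothetical, for example one wrapping an SSH call to the appliance CLI) that returns the head's current cluster state as a string; sleep and clock are injectable so the loop can be tested without actually waiting.

```python
# Sketch: poll a head's cluster state until it matches, or give up after a timeout.
# get_state is a hypothetical callable you provide; it is not an appliance API.
import time
from typing import Callable


def wait_for_state(get_state: Callable[[], str], expected: str,
                   timeout: float = 300.0, interval: float = 30.0,
                   sleep: Callable[[float], None] = time.sleep,
                   clock: Callable[[], float] = time.monotonic) -> bool:
    """Poll get_state() every `interval` seconds; return True once it equals `expected`."""
    deadline = clock() + timeout
    while True:
        if get_state() == expected:
            return True
        if clock() >= deadline:
            return False
        sleep(interval)
```

The 30-second default interval mirrors the wait suggested above; the 300-second timeout is an arbitrary choice.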

Now check the status on head 1 as well:

Now that we have done the takeover, we can perform the same upgrade steps 2 and 3 on head 1: first the ILOM upgrade (see previous post) and then the storage software upgrade. As it is the same routine as before, I will not show it here.
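For reference, the rolling order followed across these posts can be written down as a plan. A sketch only: head names and action labels are descriptive strings, not appliance commands, and the plan leaves out an optional failback to head 1, which the guide leaves to your choice of active head.

```python
# Sketch: the rolling order used in this series, as (head, action) pairs.
# Labels are descriptive only; they are not CLI commands.
from typing import List, Tuple

Step = Tuple[str, str]


def rolling_plan(first: str = "head2", second: str = "head1") -> List[Step]:
    """Upgrade the standby head, make it active via takeover, then upgrade the other."""
    steps: List[Step] = []
    for head in (first, second):
        steps.append((head, "upgrade ILOM to 3.0.16.10"))
        steps.append((head, "upgrade appliance software to 2011.1.1.0"))
        if head == first:
            steps.append((head, "takeover: becomes the active head"))
    return steps
```

Keeping the takeover between the two upgrades is what lets one head serve storage throughout.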

1.8 Conclusion

We have demonstrated that we can upgrade both the network and the storage infrastructure in our Exalogic quarter rack in a rolling fashion, keeping these services available throughout!

1.9 Next time

In a following post, we move on to the next part of patching the Exalogic infrastructure: upgrading the OS image on the compute nodes.

Publication date: 29 August 2012